As the digital landscape shifts toward greater privacy, understanding and managing “reporting noise” is becoming more important for marketers. With Google’s Privacy Sandbox and the phasing out of the Google Advertising Identifier (GAID) in the Android ecosystem, learning to navigate this new environment is critical for maintaining accurate insights and optimizing campaigns. Here’s a closer look at how these changes will affect marketers, along with actionable steps to help you adapt.

What is noise in the Privacy Sandbox?

Imagine a colorful mosaic, where each tile represents an individual user’s data. To protect each tile’s unique pattern, we sprinkle sand over the mosaic. This sand acts as “noise” to safeguard individual information, or tile patterns. In a small mosaic, the sand easily obscures specific details. However, in a larger mosaic, the same amount of sand only slightly covers the tiles, maintaining the overall pattern’s clarity while protecting individual privacy.

This analogy illustrates how noise functions in the Privacy Sandbox. When data is less aggregated, like the small mosaic, noise has a more significant impact, reducing clarity. Conversely, highly aggregated data, like in the larger mosaic, is less affected by the noise, preserving user privacy while still allowing for more meaningful insights. For a deeper dive into how noise operates, check out our conversation with Google privacy experts.
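To make the mosaic analogy concrete, here is a minimal Python sketch. It assumes the noise is additive and drawn from a Laplace distribution (the mechanism Google documents for Privacy Sandbox summary reports) and uses a hypothetical noise scale of 10 installs. It is an illustration only, not Branch’s or Google’s implementation: the same amount of noise is applied to a small count and a large count, and the average percentage error is compared.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample as the difference of two exponentials."""
    # If X, Y ~ Exponential(mean=scale) are independent, X - Y ~ Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def mean_variation(true_count: int, scale: float, trials: int = 10_000, seed: int = 7) -> float:
    """Average |noised - true| / true, in percent, over many simulated reports."""
    rng = random.Random(seed)
    errors = [abs(laplace_noise(scale, rng)) / true_count * 100 for _ in range(trials)]
    return statistics.fmean(errors)


# The same "amount of sand" (noise scale) on a small and a large mosaic.
NOISE_SCALE = 10.0  # hypothetical noise scale, in installs
small = mean_variation(true_count=100, scale=NOISE_SCALE)
large = mean_variation(true_count=100_000, scale=NOISE_SCALE)
print(f"small mosaic: ~{small:.2f}% variation")
print(f"large mosaic: ~{large:.4f}% variation")
```

Because the average magnitude of Laplace noise equals its scale, the small count ends up roughly 10% off on average, while the large count is off by about a thousandth of that.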

Effectively navigating noise in attribution reports

The key takeaway is that higher data aggregation minimizes the impact of noise. However, the challenge lies in balancing data aggregation with the need for timely, granular reports. As Branch developed designs for this next-generation analytics experience, we identified the critical need for a noise management system to help marketers navigate this complexity.

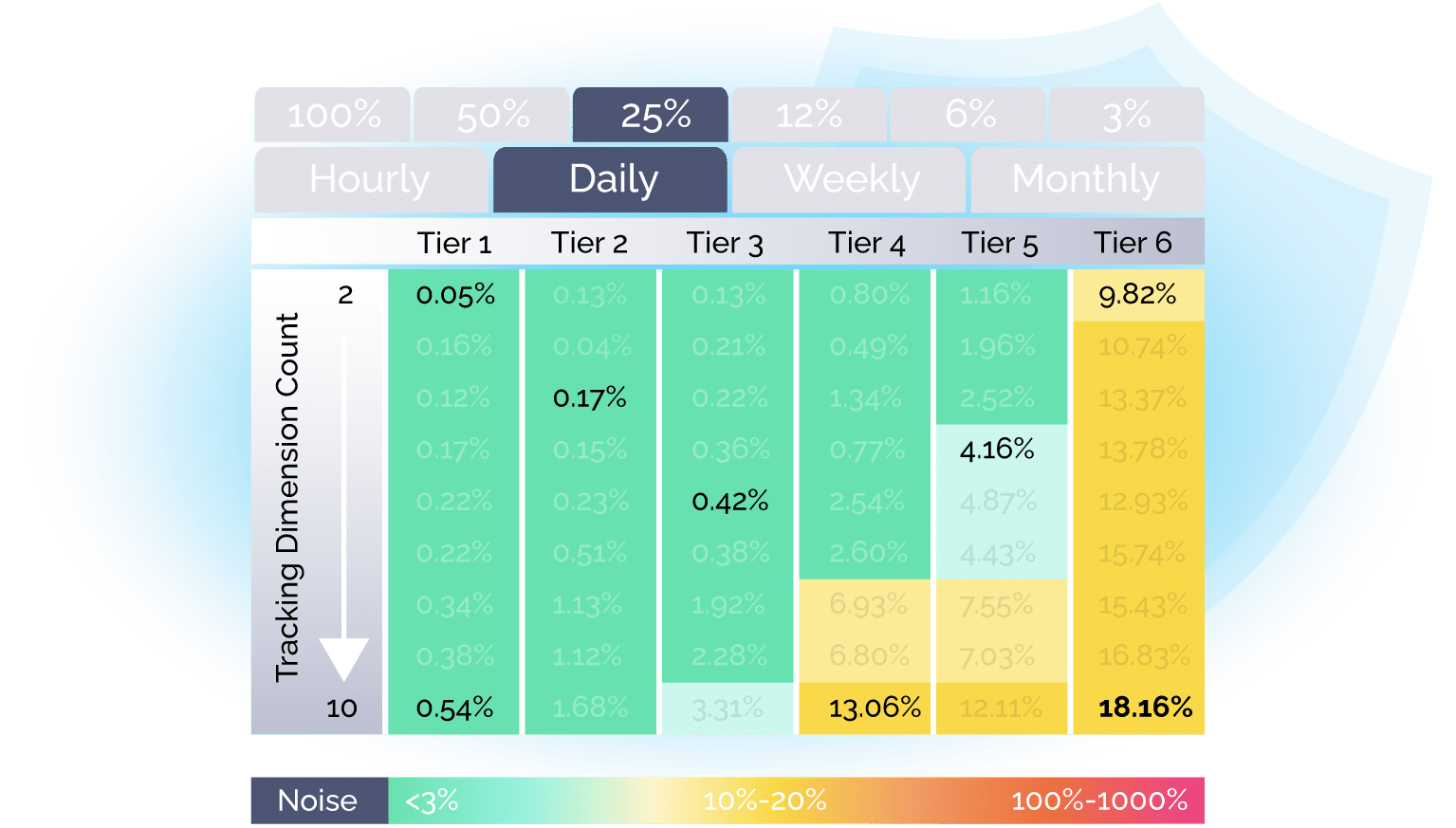

We use “variation” as a metric for noise: the percentage difference between the actual and the reported numbers, relative to the actual number. For example, if the actual install count is 100 but the noised report shows 50, the variation is 50%. This metric helps us quantify the impact of noise on our reports and determine whether campaign performance figures can be reliably compared.
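The variation metric reduces to a one-line calculation. This Python sketch assumes variation is measured relative to the actual count and uses the absolute difference, so over-reporting and under-reporting by the same amount score the same (the example above only covers under-reporting). The function name is hypothetical.

```python
def variation_pct(actual: float, reported: float) -> float:
    """Percentage difference between the actual and the noised (reported) count."""
    if actual <= 0:
        raise ValueError("actual count must be positive")
    return abs(reported - actual) / actual * 100


print(variation_pct(actual=100, reported=50))   # 50.0, the example above
print(variation_pct(actual=100, reported=150))  # 50.0, over-reporting counts the same
```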

Apps with lower advertising traffic are more susceptible to noise, meaning the reported metrics may vary more significantly from the actual figures. To address this, we’ve developed a tiered system that categorizes apps based on their daily install traffic driven by ads. This allows us to tailor noise management strategies to the unique needs of each app, ensuring more accurate reporting. The tiers are defined as follows:

- Tier 1: > 100,000 daily installs

- Tier 2: 50,000-100,000 daily installs

- Tier 3: 10,000-50,000 daily installs

- Tier 4: 1,000-10,000 daily installs

- Tier 5: 100-1,000 daily installs

- Tier 6: < 100 daily installs

Each tier reflects a different level of noise susceptibility, with the higher-numbered tiers (fewer installs) being more prone to noise. Knowing where your app falls within this system tells you how Branch will apply noise management techniques to keep reporting accurate and reliable.
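A minimal Python sketch of the tier lookup follows. The listed ranges share their endpoints (for example, 50,000 appears in both Tier 2 and Tier 3), so this sketch assumes each shared boundary belongs to the higher-volume tier; Branch’s actual boundary handling may differ. The function name is hypothetical.

```python
def install_tier(daily_ad_installs: int) -> int:
    """Map daily ad-driven installs to a noise tier (1 = most traffic, 6 = least).

    Assumption: shared range endpoints go to the higher-volume tier, and
    Tier 1 starts strictly above 100,000 as listed.
    """
    if daily_ad_installs < 0:
        raise ValueError("install count cannot be negative")
    if daily_ad_installs > 100_000:
        return 1
    if daily_ad_installs >= 50_000:
        return 2
    if daily_ad_installs >= 10_000:
        return 3
    if daily_ad_installs >= 1_000:
        return 4
    if daily_ad_installs >= 100:
        return 5
    return 6


print(install_tier(250_000))  # 1
print(install_tier(4_200))    # 4
print(install_tier(60))       # 6
```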

Branch learnings on noise management

Navigating noise in the new Privacy Sandbox environment requires a strategic approach to ensure accurate and actionable insights. Through our research and simulations, we’ve identified several critical factors that influence how noise impacts reporting. Below are the key learnings that we use to surface noise diagnostics, which in turn will inform your campaign planning and optimization.


- The more granular the tracking dimensions, the more noise affects the data. For example, tracking installs at the country level might show 100 installs from the U.S., while tracking at the city level (e.g., San Francisco) might show only 10. Each smaller bucket still receives a similar amount of noise, so its reported count is proportionally less reliable. Based on our simulations, we recommend tracking no more than seven dimensions to keep noise at manageable levels. Branch lets you customize tracking dimensions, with noise-level references to help you balance granularity against noise impact.

- Timeliness of reports also affects noise. A weekly report might aggregate 5,000 installs, while a daily report might show 1,000, and an hourly report only 10. For apps with more than 10,000 daily installs driven by ads, daily reports remain reliable, and accurate hourly reports are possible with fewer than five dimensions. For apps with fewer than 10,000 daily installs, limiting dimensions to fewer than five is crucial for high accuracy in daily reports.

- Customizing measurement goals enhances accuracy. Marketers prioritize different measurement goals, whether total install count, in-app conversions, or revenue. To accommodate these objectives, Branch lets you customize your goals and assign each one a different accuracy weight. This ensures that the most precise measurement is applied where it matters most for your business, so you can focus on the metrics that drive your success.
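All three learnings come down to the same ratio: the noise added to a bucket stays roughly fixed, while the true signal in that bucket shrinks as you add dimensions, shorten the time window, or give a goal a smaller share of the budget. The Python sketch below is a simplified model, not Branch’s implementation. It assumes aggregatable reports with the Attribution Reporting API’s per-source contribution budget of 65,536 and Laplace noise whose scale is that budget divided by a privacy parameter epsilon. The epsilon value, the goal names, and the 0.7/0.3 weights are hypothetical; the counts reuse the examples from the list above.

```python
# Assumption: per-source L1 contribution budget for aggregatable reports.
CONTRIBUTION_BUDGET = 65_536


def expected_error_pct(true_count: int, epsilon: float, weight: float = 1.0) -> float:
    """Expected |noise| as a percentage of one bucket's true signal.

    Assumes each conversion adds weight * CONTRIBUTION_BUDGET to the bucket
    and the noise is Laplace with scale CONTRIBUTION_BUDGET / epsilon, whose
    mean absolute value equals that scale.
    """
    if true_count <= 0:
        raise ValueError("true_count must be positive")
    if not 0 < weight <= 1:
        raise ValueError("weight must be in (0, 1]")
    signal = true_count * weight * CONTRIBUTION_BUDGET
    noise_scale = CONTRIBUTION_BUDGET / epsilon
    return noise_scale / signal * 100


EPSILON = 10.0  # illustrative privacy parameter, not a Branch or Google setting

# 1) Granularity: the same installs split into a finer bucket.
country = expected_error_pct(100, EPSILON)  # U.S. total
city = expected_error_pct(10, EPSILON)      # San Francisco only

# 2) Timeliness: shorter windows hold fewer installs per bucket.
window_counts = {"weekly": 5_000, "daily": 1_000, "hourly": 10}
windows = {name: expected_error_pct(n, EPSILON) for name, n in window_counts.items()}

# 3) Goal weights: one source's budget split across goals (hypothetical weights).
weights = {"installs": 0.7, "in_app_conversions": 0.3}
if sum(weights.values()) > 1:
    raise ValueError("goal weights must not exceed the contribution budget")
goals = {goal: expected_error_pct(1_000, EPSILON, w) for goal, w in weights.items()}

print(f"country {country:.2f}% vs city {city:.2f}%")
for name, err in {**windows, **goals}.items():
    print(f"{name}: {err:.3f}%")
```

In this model the budget cancels out and the expected error is 100 / (epsilon × count × weight) percent, so coarser buckets, longer windows, and heavier goal weights each improve accuracy in direct proportion.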

Practical application: Noise simulations

Let’s focus on one metric to dig into some real test results: total install count. Below are the results of a real noise simulation in the Privacy Sandbox where we measured the accuracy of total install count across different time frames such as hourly, daily, or weekly.

For Tier 1 apps (more than 100,000 daily installs), a report tracked across just two dimensions, such as country and platform, shows a noise level of 0.05%. Increasing the report to 10 dimensions (imagine adding columns like city, device type, and user acquisition source) raises noise to only 0.54%, still under 1%. This highlights how resilient large campaign volumes are to noise.

At the other end of the spectrum, for Tier 6 apps (fewer than 100 daily installs), noise becomes much more significant. A simple two-dimension report already shows noise of 9.82%, nearly doubling to 18.16% with 10 dimensions.
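The two pairs of figures above can be compared directly. This short Python snippet uses only those reported numbers (no new data) to compute how much noise grew from 2 to 10 dimensions, both in percentage points and as a ratio. The names are illustrative.

```python
# Variation (%) in total install count from the simulation above,
# keyed by the number of reporting dimensions.
SIMULATION = {
    "Tier 1": {2: 0.05, 10: 0.54},
    "Tier 6": {2: 9.82, 10: 18.16},
}


def noise_growth(by_dims: dict[int, float], low: int = 2, high: int = 10) -> tuple[float, float]:
    """Return (percentage-point increase, ratio) from `low` to `high` dimensions."""
    return by_dims[high] - by_dims[low], by_dims[high] / by_dims[low]


for tier, by_dims in SIMULATION.items():
    points, ratio = noise_growth(by_dims)
    print(f"{tier}: +{points:.2f} percentage points ({ratio:.2f}x)")
```

Tier 1 noise grows about 10.8x in relative terms but adds only 0.49 percentage points, staying under 1%. Tier 6 grows about 1.85x, yet that adds 8.34 percentage points on top of an already high baseline.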

When attribution for Android users eventually relies exclusively on Privacy Sandbox systems, you’ll need a performance measurement partner like Branch that has invested significantly in research and development on noise impacts. Branch Performance analytics will surface the key insights you need to make informed decisions about structuring reports and optimizing strategies.

If you’re interested in the results of simulations beyond the one featured here (such as hourly, weekly, or monthly reports), reach out to us.

Embracing the Privacy Sandbox

The introduction of noise under the Privacy Sandbox is part of a larger shift toward a privacy-focused digital marketing ecosystem. Branch is committed to delivering comprehensive and accurate performance measurement, with a strong focus on privacy-compliant attribution, empowering marketers and digital businesses to thrive in an ever-evolving privacy landscape. As we continue to collaborate with the Google Privacy Sandbox team, we’ll keep sharing practical insights and updates. Stay tuned to our latest posts for more in-depth learnings and strategies.